Data Loss Prevention Software

This article looks at Data Loss Prevention (DLP) software commonly used in financial organisations and how it affects open source consumption and contribution. It is not a complete reference for the subject of DLP generally, but should act as a starting point for understanding the issues involved.

Data loss prevention (DLP) software detects potential data breaches/data ex-filtration transmissions and prevents them by monitoring, detecting and blocking sensitive data while in use (endpoint actions), in motion (network traffic), and at rest (data storage). - Data Loss Prevention Software, Wikipedia

Other Useful Terms

Security Information and Event Management (SIEM): real-time monitoring and analysis of security alerts generated by network devices, servers, and applications.

Security Orchestration, Automation, and Response (SOAR): software that helps security teams automate and streamline their incident response workflows.

Types of DLP Software

There are four basic types of DLP software, which are worth covering here:

Endpoint: data-in-use DLP solutions are installed on an end-user machine (say a laptop or PC) or a server. They work by monitoring and controlling the flow of data on individual endpoints. The solution scans files and data in real time, looking for sensitive information (e.g. social security numbers, credit card numbers, or confidential business data). If it identifies sensitive data, it can block the data from being transferred, encrypt it, or apply other protective measures, based on the policies and rules defined by the organization.
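The scanning step described above can be sketched as follows. This is a minimal illustration, not how any particular product works: the two regular expressions, the `luhn_valid` helper and the `find_sensitive` function are all hypothetical names introduced here, and real endpoint DLP tools use far richer detectors.

```python
import re

# Hypothetical detectors, for illustration only.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def find_sensitive(text: str) -> list[tuple[str, str]]:
    """Return (kind, matched_text) pairs for anything that looks sensitive."""
    findings = [("ssn", m.group()) for m in SSN_PATTERN.finditer(text)]
    for m in CARD_PATTERN.finditer(text):
        digits = re.sub(r"\D", "", m.group())
        # The checksum filters out most digit runs that are not card numbers.
        if luhn_valid(digits):
            findings.append(("card", m.group()))
    return findings
```

A policy engine would then decide, per finding, whether to block the transfer, encrypt the data or simply log the event.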

Cloud: Modern enterprises are increasingly turning to the cloud for data storage (data at rest) and processing (data in use). This presents another venue for data loss to occur. Cloud-based DLP (such as Google Cloud DLP) allows you to configure which data is sensitive in your data stores and then mask that data when it is used in queries.
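To make the idea of masking concrete, here is a small sketch that does not use any cloud provider's API. The column names, `mask_value` and `mask_row` are assumptions made for illustration; a managed service would apply an equivalent transformation on the provider's side.

```python
# Hypothetical configuration: which columns of a data store count as sensitive.
SENSITIVE_COLUMNS = {"card_number", "account_number"}


def mask_value(value: str, keep_last: int = 4) -> str:
    """Replace all but the last `keep_last` characters with asterisks."""
    if keep_last <= 0 or len(value) <= keep_last:
        return "*" * len(value)
    return "*" * (len(value) - keep_last) + value[-keep_last:]


def mask_row(row: dict[str, str], sensitive: set[str] = SENSITIVE_COLUMNS) -> dict[str, str]:
    """Return a copy of a query result row with sensitive columns masked."""
    return {
        column: mask_value(value) if column in sensitive else value
        for column, value in row.items()
    }
```

Keeping the last few characters visible is a common compromise: it lets staff recognise a record without exposing the full value.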

Network: data in motion DLP solutions usually involve Firewalls set up at egress points at the perimeter of the network (see below).

Source Control DLP: some DLP tools focus specifically on open source contribution DLP (see below).

This article focuses on Network DLP and Source Control DLP, since these are the types that most often interfere with open source consumption and contribution.

Firewalls for Network DLP

In computing, a firewall is a network security system that monitors and controls incoming and outgoing network traffic based on predetermined security rules. A firewall typically establishes a barrier between a trusted network and an untrusted network, such as the Internet. - Firewall, Wikipedia

In a corporate environment such as a bank, there are usually firewalls placed on both incoming and outgoing traffic. The firewall scans incoming and outgoing data and applies rules about what is and isn't allowed as traffic, much as a security guard can allow or prevent people from entering a building.

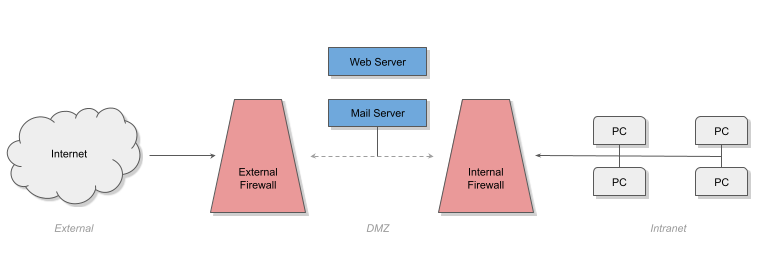

Incoming requests from external clients to an organisation's servers (such as requests for web pages, or for delivery of email) are usually routed to machines in the DMZ (or demilitarized zone). Meanwhile, the intranet contains most of the bank's private hardware including the PCs of the bank's staff.

Staff connecting from home will usually access the intranet (as though they were in the building) via a Virtual Private Network (VPN).

Sometimes, financial institutions will have further firewalls to divide their network further, say between the general intranet and production systems. A good analogy for this might be a "VIP" area in a night club which has its own security beyond the main front door.

Filtering / Security

Messages from one machine to another are sent across both the intranet and internet in packets. For messages to cross from one to the other, they have to go through the firewall.

The aim of the firewall is to filter packets as they pass through, only allowing benign packets to pass. Over time, there has been considerable evolution in the functionality of firewall technology which we will summarize here:

Packet-Address Based (1980s-). The address component of a networking packet is standard to all networking traffic. The first firewalls simply maintained a list of allowed destinations for packets to be sent to. This could be used to prevent staff from accessing a particular web site at a given address.
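A minimal sketch of address-based filtering, using Python's standard `ipaddress` module. The networks in `ALLOWED_DESTINATIONS` are documentation-only example ranges chosen here for illustration, and `is_allowed` is a hypothetical helper, not part of any firewall product.

```python
from ipaddress import ip_address, ip_network

# Hypothetical allow-list of destination networks (reserved example ranges).
ALLOWED_DESTINATIONS = [
    ip_network("203.0.113.0/24"),
    ip_network("198.51.100.7/32"),
]


def is_allowed(destination: str) -> bool:
    """Permit a packet only if its destination address is on the allow-list."""
    address = ip_address(destination)
    return any(address in network for network in ALLOWED_DESTINATIONS)
```

Note that the decision uses nothing but the destination address: the filter knows nothing about who is sending the packet or what it contains.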

Connection Based (1990s-). A second generation of firewalls performed more analysis and traced the back-and-forth of packets through the firewall to establish bi-directional packet exchange between two machines (a connection). This allowed policies to be tailored based on who was communicating, so that allow- or deny-lists could be applied per connection.
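Connection tracking can be sketched as a table of connections opened from inside the network, with inbound packets accepted only when they are replies to one of them. The `Packet` and `StatefulFirewall` classes below are simplified, hypothetical models; real firewalls also track protocol state, timeouts and much more.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    src_port: int
    dst: str
    dst_port: int
    outbound: bool  # True when the packet is leaving the intranet


class StatefulFirewall:
    def __init__(self, allowed_ports: set[int]) -> None:
        self.allowed_ports = set(allowed_ports)
        # Each entry is (local address, local port, remote address, remote port).
        self.connections: set[tuple[str, int, str, int]] = set()

    def accept(self, packet: Packet) -> bool:
        if packet.outbound:
            if packet.dst_port not in self.allowed_ports:
                return False
            # Remember the connection so that replies can be let back in.
            self.connections.add(
                (packet.src, packet.src_port, packet.dst, packet.dst_port)
            )
            return True
        # Inbound: accept only replies belonging to a tracked connection.
        key = (packet.dst, packet.dst_port, packet.src, packet.src_port)
        return key in self.connections
```

For example, after an outbound packet from `10.0.0.5:50000` to `203.0.113.10:443` is accepted, the matching reply is allowed in, while an unsolicited inbound packet from another host is dropped.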

Deep-Packet Inspection/Next Generation (2010s-). The next step for firewalls was to inspect the contents of the packets being sent on the network (made more complex since most content on the internet is now encrypted). At a simple level, this allows the firewall to filter based on a particular web page within a site, or to filter out specific types of action (e.g. POSTed data denied but GET requests for data are allowed).
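As a rough sketch of content-based filtering, the function below looks at the first line of an HTTP request and allows read-only methods while denying everything else. It assumes the traffic has already been decrypted by the inspecting device, and `inspect_http` is a hypothetical name introduced for this example.

```python
READ_ONLY_METHODS = {"GET", "HEAD"}


def inspect_http(raw_request: bytes) -> str:
    """Return "allow" or "deny" based on the HTTP method of a raw request."""
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii", errors="replace")
    parts = request_line.split(" ")
    if len(parts) != 3:
        return "deny"  # not a well-formed request line, so fail closed
    method, _path, _version = parts
    return "allow" if method in READ_ONLY_METHODS else "deny"
```

Failing closed on anything that cannot be parsed is a design choice made here for safety; it is also one reason such rules can block legitimate traffic.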

DLP and Open Source

Internal firewalls have been used as a control for Data Leakage Prevention (DLP). By removing access to sites such as Google Docs, or popular social networking sites, compliance teams are able to remove a certain vector of (mainly accidental) data exfiltration.

Sites like GitHub are often included in deny-lists, since data can be posted to them. This prevents developers from viewing a large amount of open source software.

Sites like StackOverflow (a question-and-answer site for developers) are also often included in deny-lists, because they too can have data posted to them, and have a strong social component. Being unable to access sites like this is a serious impediment to the effective use of open source software.

DLP software is effective against casual or accidental exfiltration. However, anyone maliciously exfiltrating data can send it to an almost unlimited number of destinations on the internet and can employ encryption techniques to hide the data payload. Dealing with this risk requires controls on data access rather than at the firewall level.

Pattern Matching

As a workaround, some organisations use Deep Packet Inspection firewalls to create much more granular rules based on the contents of the traffic.

For example:

- With complex templates, you can allow GitHub to be viewed by certain subsets of people. In many organisations, developers have to request access to GitHub (i.e. be added to the allow list patterns for the site) in order to be able to view it at all.

- Some organisations craft rules to allow staff to view content but not post to StackOverflow.
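The two examples above could be expressed as rules along the following lines. This is a hedged sketch only: the `Rule` structure, the group name `developers` and the `is_permitted` function are invented for illustration and do not reflect the rule syntax of any specific firewall.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Rule:
    host_pattern: str
    methods: frozenset[str]
    groups: frozenset[str]  # an empty set means the rule applies to everyone


# Hypothetical policy: developers may view GitHub, anyone may view StackOverflow.
RULES = [
    Rule("github.com", frozenset({"GET"}), frozenset({"developers"})),
    Rule("*.github.com", frozenset({"GET"}), frozenset({"developers"})),
    Rule("stackoverflow.com", frozenset({"GET"}), frozenset()),
]


def is_permitted(host: str, method: str, user_groups: set[str]) -> bool:
    """Allow a request only if some rule matches; everything else is denied."""
    for rule in RULES:
        if not fnmatchcase(host.lower(), rule.host_pattern):
            continue
        if method not in rule.methods:
            continue
        if rule.groups and not (rule.groups & user_groups):
            continue
        return True
    return False
```

Even in this toy form, each new site or exception adds more rules, which hints at how such policies become hard to maintain.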

Tools supporting pattern matching include:

Machine Learning

Tools based on simple allow- and deny-lists are broken, and more complex pattern matching can become very cumbersome:

Security analysts get fed up with having to manually chase large numbers of false positive incidents that require deep and time-consuming investigations. - Mediocre DLP Solutions Fall Short on Data Detection, Palo Alto Networks

Newer tools therefore apply machine learning to Deep-Packet Inspection:

... to combat this situation, advanced machine learning is the present and the future of data protection because it makes data identification more accurate and simplifies detection. - Mediocre DLP Solutions Fall Short on Data Detection, Palo Alto Networks

Tools Include:

Data Fingerprinting

A second technique is to fingerprint the private data within the organisation and use these fingerprints in the firewall to look for private data being exfiltrated:

In addition, it should leverage Exact Data Matching (EDM) to detect and monitor specific sensitive data, and protect it from malicious exfiltration or loss. Designed to scale to very large data sets, EDM fingerprints an entire database of known personally identifiable information (PII), like bank account numbers, credit card numbers, addresses, medical record numbers and other personal information stored in a structured data source, such as a database, a directory server, or a structured data file such as CSV or spreadsheet. This data is then detected across the entire enterprise, as it traverses the network edge or it is transferred by employees from remote locations. - A Reliable Data Protection Strategy Hinges Upon Highly Accurate Data Detection, Palo Alto Networks
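The general idea of fingerprinting can be sketched with keyed hashes: known sensitive values are hashed once into an index, and outbound content is tokenised and hashed the same way to look for matches. This is an illustration of the concept, not the EDM implementation described in the quote; `SECRET_KEY`, `fingerprint`, `build_index` and `contains_known_data` are all hypothetical.

```python
import hashlib
import hmac
import re

# Hypothetical key; keying the hash means the index alone does not reveal the data.
SECRET_KEY = b"replace-with-a-real-secret"

TOKEN_PATTERN = re.compile(r"[A-Za-z0-9-]+")


def fingerprint(value: str) -> str:
    """Normalise a value (drop spaces and hyphens, lower-case) and hash it."""
    normalised = re.sub(r"[\s-]", "", value).lower()
    return hmac.new(SECRET_KEY, normalised.encode("utf-8"), hashlib.sha256).hexdigest()


def build_index(known_values: list[str]) -> set[str]:
    """Fingerprint every known sensitive value from a structured source."""
    return {fingerprint(value) for value in known_values}


def contains_known_data(payload: str, index: set[str]) -> bool:
    """Check whether any token in the outbound payload matches a fingerprint."""
    return any(fingerprint(token) in index for token in TOKEN_PATTERN.findall(payload))
```

Because only exact (normalised) values match, this approach produces few false positives, but a value split across tokens or deliberately transformed would slip past this simple version.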

Here are some tools that support data fingerprinting:

Summary

This article describes how DLP software works in the enterprise, focusing on firewalls, and how this can be a barrier to contributing to open source.

For more details on how to effectively contribute to open source given these constraints, please see the article about Publication.

Further Reading

- DLP Best Practices For DLP Implementation - By SunnyValley